Quantum computers promise to use the strange laws of quantum mechanics to process information in new ways. However, promises cannot run algorithms. Algorithms require real quantum hardware, and building practical, large‑scale quantum hardware demands a concrete, feasible roadmap.

Real quantum hardware is not perfect: each component introduces small errors that build up as the number of components grows. A quantum computer that can operate correctly even in the presence of these errors is called fault-tolerant. Achieving fault tolerance represents the shift from an experimental curiosity to a universal, scalable technology.

Recently, Sparrow Quantum, in close collaboration with researchers from the Niels Bohr Institute at the University of Copenhagen, developed a practical blueprint for a fault-tolerant photonic quantum computer. A photonic quantum computer uses photons, the fundamental particles of light, to perform calculations. The blueprint uses our core technology, quantum dots, as on-demand sources of photons. The blueprint’s architecture addresses three challenges that fundamentally limit photonic quantum computing:

Photons are, understandably, very important to a photonic quantum computer. The gold standard in photon quality is to have both pure and bright single photons. Pure means having one and only one photon at a time, while bright means having a high rate of photons with low loss. There are two distinct types of single-photon sources: probabilistic and deterministic. Probabilistic sources produce single photons randomly at some rate. However, due to this randomness, they also sometimes produce two photons. They face an intrinsic trade-off between creating any photons at all and creating two photons. Increase the rate of single photons and you get more two-photon pollution. Decrease the rate and you get far fewer single photons. That is, no probabilistic (heralded) single-photon source is both bright and pure.
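The trade-off can be illustrated with a toy model. The sketch below assumes, purely for illustration, that the number of photons emitted per pulse follows a Poisson distribution with a tunable mean; real probabilistic sources may follow different statistics, and the function name `photon_number_probs` is hypothetical.

```python
import math


def photon_number_probs(mean_photons):
    """Return (P(0), P(1), P(2 or more)) for an assumed Poisson source."""
    p_zero = math.exp(-mean_photons)
    p_one = mean_photons * p_zero
    p_multi = 1.0 - p_zero - p_one
    return p_zero, p_one, p_multi


# Sweep the mean photon number (an illustrative knob, not a measured value).
for mean in (0.01, 0.1, 0.5):
    p_zero, p_one, p_multi = photon_number_probs(mean)
    # Multi-photon events per single-photon event: a rough measure of impurity.
    pollution = p_multi / p_one
    print(f"mean={mean:<5} P(1)={p_one:.4f} P(>=2)={p_multi:.5f} pollution={pollution:.3f}")
```

In this model, raising the mean makes single photons more frequent but also raises the share of multi-photon events, which is the brightness versus purity tension described above.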

Many quantum protocols are designed to work with exactly one photon. As an analogy, imagine a carefully planned treatment from your doctor where you’re supposed to take one daily dose of medicine. With a deterministic source, you get one (and exactly one) dose per day. With a probabilistic source, what you get is intrinsically random: sometimes you get one, sometimes none, and sometimes two. Thankfully, in the Sparrow Quantum labs, we have world-class deterministic single-photon sources.

The blueprint uses complex entangled states of photons, called graph states, for computations. We can show how the photons are connected (entangled) using a graph, which is why these states are called graph states. In the graph representation, each vertex is a single photon and each edge, connecting the vertices, represents entanglement.
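As a minimal sketch of this representation, a graph state can be described by its set of edges. The helper names below (`linear_chain`, `star`, `neighbours`) are hypothetical and only describe the graph, not the quantum state itself. The star graph is included because it corresponds to a GHZ state up to single-qubit operations.

```python
def linear_chain(n_photons):
    """Edges of a chain: photon i is entangled with photon i + 1."""
    return {(i, i + 1) for i in range(n_photons - 1)}


def star(n_photons):
    """Edges of a star: photon 0 is entangled with every other photon."""
    return {(0, i) for i in range(1, n_photons)}


def neighbours(edges, vertex):
    """Photons that share an edge (entanglement) with the given photon."""
    return sorted(b if a == vertex else a for a, b in edges if vertex in (a, b))


chain = linear_chain(4)
ghz_like = star(4)
print("chain edges:", sorted(chain))
print("star edges: ", sorted(ghz_like))
print("neighbours of photon 1 in the chain:", neighbours(chain, 1))
print("neighbours of photon 0 in the star: ", neighbours(ghz_like, 0))
```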

To encode a computation, the right graph state needs to be built. The blueprint details two ways that we can make an entangled connection between photons. The first is to generate entangled photons directly from our single-photon sources. This process is limited to producing only small states, called ‘resource states’, like the linear chain or GHZ state shown in Figure 2. These resource states are not enough to do fault-tolerant quantum computing. The second uses a process called photonic fusion. It starts with the resource states and joins them to create larger graph states, like the synchronous foliated Floquet colour code lattice shown on the right of Figure 2.

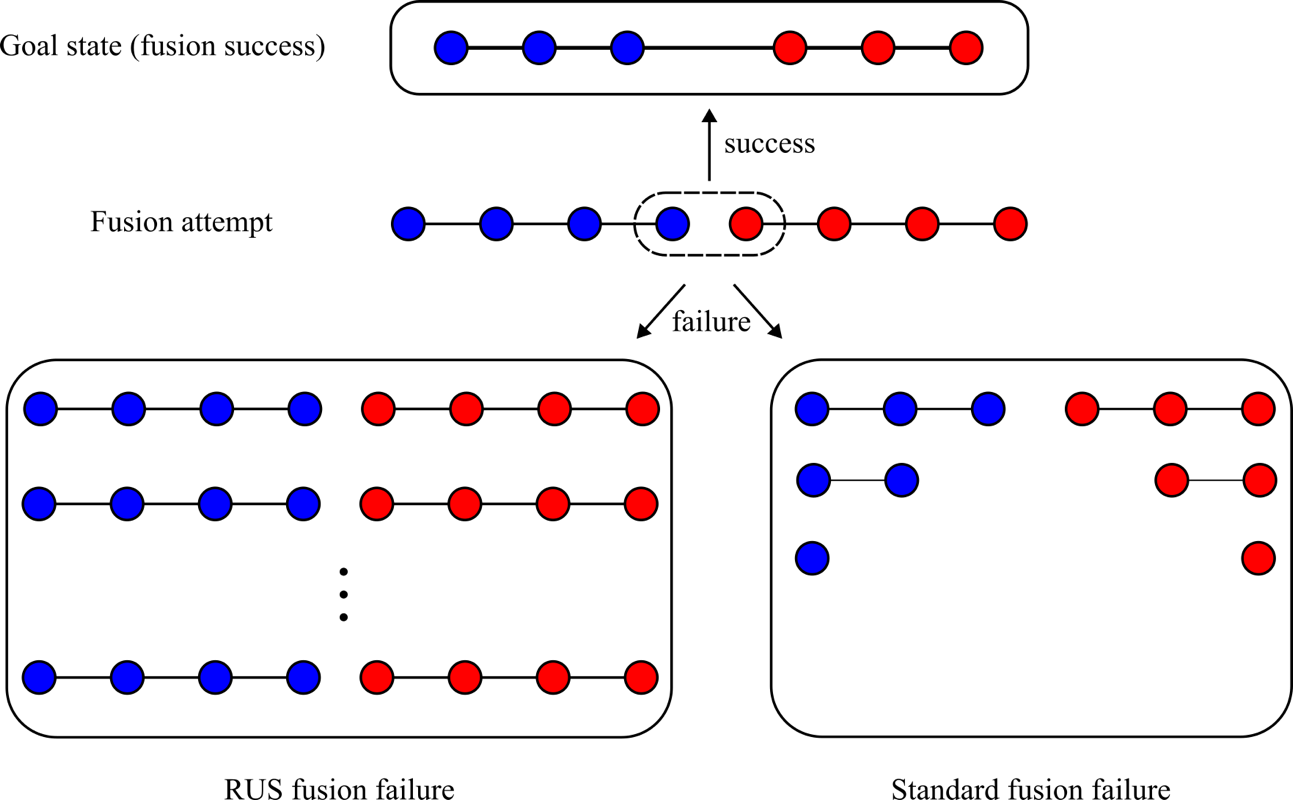

Photonic fusion has a problem: it succeeds probabilistically. When it fails, the two photons are lost. This often means that entirely new resource states need to be made before the fusion can be tried again. However, the blueprint uses a special type of fusion, called repeat-until-success (RUS) fusion.

Suppose you wish to build a large tower using Lego. You might start by building two small towers and then connecting them. Imagine if you had to throw away both towers if you didn't manage to connect them on the first try. This is how you would have to build without RUS fusion. With RUS fusion, you can keep trying until the two bricks are joined, without affecting the rest of the tower. Unlike snapping Lego together, which you could get better at with practice, photonic fusion is inherently probabilistic, having a 50% probability of failing each time. For this reason, being able to try the connections again (RUS fusion) is a huge improvement over standard fusion.
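The benefit of retrying can be put in numbers with a simplified model. The sketch below assumes each fusion attempt succeeds independently with probability 50%, ignores photon loss, and allows unlimited retries; a real RUS protocol has a bounded number of attempts, so this is an idealisation rather than the blueprint's scheme. The function names are hypothetical.

```python
import random


def success_within(n_attempts, p_success=0.5):
    """Probability that at least one of n independent attempts succeeds."""
    return 1.0 - (1.0 - p_success) ** n_attempts


def attempts_until_success(rng, p_success=0.5):
    """Simulate retries and return how many attempts were needed."""
    attempts = 1
    while rng.random() >= p_success:
        attempts += 1
    return attempts


for n in (1, 2, 4, 8):
    print(f"success within {n} attempt(s): {success_within(n):.4f}")

rng = random.Random(0)  # fixed seed so the simulation is repeatable
trials = [attempts_until_success(rng) for _ in range(20_000)]
print(f"simulated mean attempts: {sum(trials) / len(trials):.2f}")
```

Under these assumptions a single-shot fusion works half the time, while a handful of retries pushes the success probability close to one, at an average cost of about two attempts per connection.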

Of course, we should still try to build the simplest graph state that we can. Each way of encoding computations is called a 'code', and different codes need different numbers of optical components. Having low optical complexity (or optical depth) means that we will have lower loss and a higher number of usable photons. This means we need fewer tries and fewer resources to make a graph state, which makes the approach easier to scale. The blueprint uses a clever code (the synchronous foliated Floquet colour code seen in Figure 2) which dramatically reduces the optical depth.
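To see why optical depth matters, consider a rough model in which a photon passes through a series of identical components, each transmitting it with the same probability. Both the model and the 99% transmission figure below are illustrative assumptions, not values from the blueprint.

```python
def survival_probability(transmission_per_component, optical_depth):
    """Chance a photon survives a chain of identical, independent components."""
    return transmission_per_component ** optical_depth


TRANSMISSION = 0.99  # hypothetical per-component transmission

for depth in (1, 10, 50, 100):
    survival = survival_probability(TRANSMISSION, depth)
    print(f"optical depth {depth:>3}: photon survives with probability {survival:.3f}")
```

Because the losses multiply, even very good components add up quickly, so reducing the number of components each photon sees pays off directly in usable photons.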

Changing the code also changes how fast the computation runs. It is no good having low optical depth if the computation doesn't work or runs slowly. The scheme works with realistic noise and lossy optical components. Our research team identify and simulate ten different noise mechanisms, allowing us to understand precisely how much error can be tolerated from each source of noise. Our research shows that the scheme in our blueprint has both low optical depth and competitive computational speed.

The blueprint establishes quantum dot sources as serious contenders for quantum computing. Many of the required parameters are already demonstrated by Sparrow Quantum sources. In the blueprint, our research team combine quantum dots with RUS fusion and optimised quantum codes. This results in a system that can overcome the limitations of each individual component. Low optical depths address scalability issues, and error modelling sets realistic performance expectations.

This blueprint is a practical step towards scalable photonic quantum computing. It quantifies what it takes to build a quantum computer, and what concretely needs to be improved. We now know how to build a photonic quantum computer with our multi-photon entanglement sources. That is, there is now a clear path from today’s state-of-the-art experiments to large-scale, fault-tolerant photonic computation.